We will score a test on quadratics as 90%, score a test on rational functions as 40%, and then average those two scores to 65%. Throw in a couple of missing homework assignments and it's a failure. Add a bunch of homeworks handed in (100% each, weighted average), another test on square roots (80%), "participation points" for having a pencil every day and not being an asshole, and some "extra credit" for a well-done project on exponential functions that was mostly a rehash of something done in Algebra I, and now this is a C+ or a B grade. If it's 79.43%, then it's a C+.

How in the name of Cthulhu can we be that accurate?

- Why does having a pencil raise your grade?

- Why does missing homework lower your grade?

- How does "extra credit" on one topic cover the fact that you don't know what you're doing on a second or third topic?

Can't solve an equation, can't find asymptotes or holes, can't factor quadratics if a != 1, can't determine the missing terms in a geometric sequence ... but can grub points here and there, and "Boy, he's trying really hard and he deserves to get a few extra points so his grade is above 80."

How can you assure the Pre-Calculus teacher that this kid is ready for it? How about the college professors who are constantly droning on about freshmen in remedial math classes?

Look at those standards. Sure, they're all about working with quadratics in some form or other, but skill in N.CN.2 does not equate to skill in A.SSE.3 or in F.IF.7. So how does the good grade we get in part of this "help raise" the poor grade in another?

Shouldn't we be asking for skill and understanding in each of these? Don't we want proficiency (to some standard) in all of these before we say "Algebra 2" on a transcript?

And so we arrive at Proficiency-Based Grading.

At its ideal, it's perfect.

- List all the proficiencies.

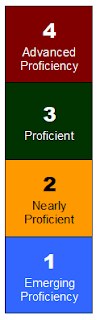

- Set up a scale: Proficient w/Distinction, Proficient, Nearly Proficient, Emerging Proficiency.

- Assess: Decide the rubric/scoring method, be consistent, ignore the names, begin.

Repeat to the students, "There are ten things you need to know before you can say 'I understand Algebra 2' and can take that to the next course."

Ah, but this is education, and now we need to "fix" things.

First, having only ten grades in the gradebook is not going to cut it with secondary level administrators. You need to include all of your formative and summative assessments.

Then, because we have to use PowerSchool, we need to list all of the standards for math, even if we are only focused on those 10 for this course. The other math teachers need their 10 things, and PowerSchool can't be configured differently for each course ... blah, blah, blah. Probably it can, but the tech people and the curriculum coordinator can't figure it out, so fuck you.

Every column needs a grade, so the pilot teachers enter E for everything not covered in Algebra 2. (That's a lot.)

Parents immediately complain that there are all these Es, "Why is this?" So we make a fifth category, "N/A, Haven't done this yet."

People who should know better insist that everything have a numerical value. So we label the levels 1, 2, 3, 4 (and 0 for the "Haven't Done It Yet" category) ... and PowerSchool promptly averages the scores.

That's right, it takes the old problem of averaging things that have nothing to do with each other and magnifies it by averaging Ordinal Data of things that have nothing to do with each other.

"Advanced Understanding of Adding and Subtracting complex numbers" combined with "Nearly Proficient in Graphing Functions" somehow equates to 3, Proficient.

Not only that, but if you have something like N.CN.2 which you have determined to be only a 1, 2, or 3 scale ... well, your students are going to be shocked when they can't "get a 4" for the course.

XKCD: 937

Then there's accuracy. How accurate is that 3 or 4, anyway? The teachers who piloted this program in the other building began to think that "this 3 is different from that 3; I want to show progress" and promptly began to use halfs.

Then came re-takes. If I give a ten-question assessment of N.CN.2, and a student is deemed nearly proficient on those ten questions, does that mean the student is proficient? Probably not. Let's test him again. He takes four more tests over the next few days and scores proficient all four times. His average is still less than 3. To be exact, (2 + 3 + 3 + 3 + 3)/5 = 14/5 = 2.8.
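If you want to check that arithmetic yourself, here is a minimal Python sketch. It assumes the 1-4 numbering described above (2 for Nearly Proficient, 3 for Proficient); the variable names are mine for illustration and have nothing to do with how PowerSchool stores anything.

```python
from statistics import mean

# Assumed numeric labels, following the 1-4 scale described above.
NEARLY_PROFICIENT, PROFICIENT = 2, 3

# One nearly proficient first attempt, then four proficient retakes.
scores = [NEARLY_PROFICIENT] + [PROFICIENT] * 4

average = mean(scores)   # (2 + 3 + 3 + 3 + 3) / 5
latest = scores[-1]      # the most recent evidence of what he can do

print(f"average = {average}, most recent = {latest}")
```

The most recent score says Proficient; the average says he isn't quite there. Same kid, same work.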

So we compensate by telling PowerSchool to "take the most recent four scores" every time, thinking that we want to see improvement. The kid who scores 4 because he absolutely understands it and can use this knowledge to write a computer program to run a Lego Mindstorms robot to draw the function on the hallway floor still has to take the test three more times so PowerSchool can find an average of the "most recent four". And, just to be funny, he scores 4, 4, 4, and 0, and lets PowerSchool average that to "Proficient".
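A rough sketch of that "most recent four" rule, in Python. The function `most_recent_average` is a hypothetical stand-in I made up for the gradebook setting, not PowerSchool's actual calculation.

```python
from statistics import mean

def most_recent_average(scores, window=4):
    """Average only the last `window` scores (hypothetical gradebook rule)."""
    return mean(scores[-window:])

# The robot kid: three honest 4s, then a 0 just to be funny.
robot_kid = [4, 4, 4, 0]

# The retake kid from before: one 2, then four 3s.
retake_kid = [2, 3, 3, 3, 3]

print(most_recent_average(robot_kid))
print(most_recent_average(retake_kid))
```

Under this rule both students come out at the same number, even though one of them could teach the unit and the other needed five tries.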

Remember that comment about needing all of the formative and summative assessments? Formative work is simply assignments and quizzes that help your students learn. They try, and fail, then try again. You need to assess this work, but it doesn't count. All you want here is "Does the student understand N.CN.7?"

I said that formative work should be recorded but be worth 0% - enough to be noticed but not enough to matter. Of course, telling admin that something won't count means they assume that students won't do it, so it has to count. Those "in charge" at my school decided formative was 25% of the grade, summative 75%.

Thus, formative scores of 1, 2, 2, 1, 2, and 3 (because the student is still learning) and then summatives of 3, 3, 3, and 3 (all proficient, meaning this kid understands this topic) will result in a final mark of 2.7 (nearly proficient).
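Here is that calculation as a short Python sketch, assuming the 25%/75% split is applied to the plain average of each category. That is my reading of the policy; the variable names are made up for illustration.

```python
from statistics import mean

formative = [1, 2, 2, 1, 2, 3]   # still learning
summative = [3, 3, 3, 3]         # proficient every time it counted

# Weights decided by those "in charge".
FORMATIVE_WEIGHT, SUMMATIVE_WEIGHT = 0.25, 0.75

final_mark = (FORMATIVE_WEIGHT * mean(formative)
              + SUMMATIVE_WEIGHT * mean(summative))

print(round(final_mark, 1))
```

With a 0% weight on formative work, the same student lands on exactly 3.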

How does this make sense? It doesn't.

I'm certain that many of you are saying "Hey, the old way did most of this, too?"

Yes, Mr. TuQuoque.

My point is that we should have Proficiency-Based Grading without these pitfalls. If PowerSchool can't do it properly, then you should stay with the old grading methods until you get a proper gradebook.

Warning Signs that you're Doing it Wrong:

- You find yourself using 3.5 because the student is "More Proficient than just Proficient" but not quite "Advanced Proficient"

- You average what shouldn't be averaged.

- You let the learning process alter the proficiency measurement.

- 90% of the marks in your gradebook are 0 because those standards aren't in this course.

- Workarounds of any kind in PowerSchool.

We are trying that too. We have tossed "halfs" but they want to get rid of 1, 2, 3 and 4 and go to ABC because "the parents don't understand."

My response is "I can explain it to anybody in four minutes, they don't want to understand."

Thank you for consistently and clearly laying out the major problems with the systems provided to us to try to meet the ever changing standards.
